Foxconn has announced its first large language model (LLM), called FoxBrain. The company said it plans to use the model to improve manufacturing and supply chain management.

What does Foxconn's FoxBrain offer?

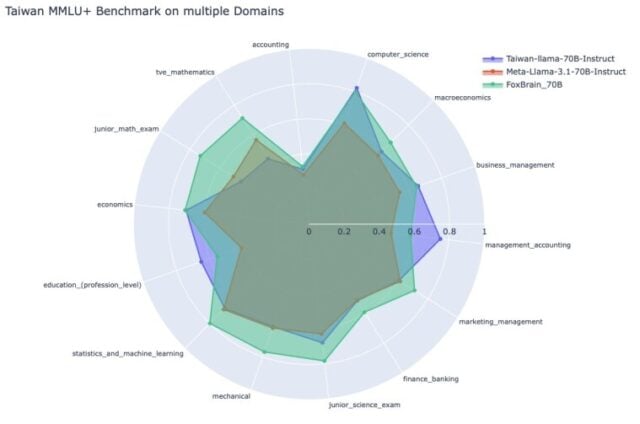

In a statement, the company said FoxBrain was trained using only 120 NVIDIA H100 GPUs. The LLM is based on the Meta Llama 3.1 architecture with 70B parameters and was built using distillation. Model distillation takes a large main (“teacher”) model and trains a smaller “student” model on the teacher's answers. Foxconn also acknowledged that the LLM is not as good as China's DeepSeek distillation model, but said its overall performance is very close to world-class standards.
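To make the distillation idea concrete, here is a minimal sketch of the classic soft-label formulation, in which the student is penalized by the KL divergence between its output distribution and the teacher's. Foxconn has not said which distillation objective it used, so the loss, the temperature value, and the three-token logits below are all illustrative assumptions rather than details of FoxBrain's training.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits over a three-token vocabulary (not real model outputs).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The student that agrees with the teacher gets the smaller loss, and training pushes the student's weights in the direction that shrinks it.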

Yung-Hui Li, Director of the Artificial Intelligence Research Center at Hon Hai Research Institute, said: “In recent months, the deepening of reasoning capabilities and the efficient use of GPUs have gradually become the mainstream direction of development in artificial intelligence. Our FoxBrain model adopted a very efficient training strategy that focuses on optimizing the training process instead of blindly accumulating computing power.

“Thanks to carefully designed training methods and resource optimization, we successfully created a local artificial intelligence model with strong reasoning capabilities.”

Training ran on 120 H100 GPUs, scaled with NVIDIA's Quantum-2 InfiniBand networking, and was completed in about four weeks, at a total computational cost of 2,688 GPU days. The model has a context window of 128K tokens, and Foxconn generated 98 billion tokens of high-quality pre-training data in Traditional Chinese.
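As a quick sanity check on those numbers, the short calculation below converts the reported compute budget into wall-clock time. It reads the reported figure as 2,688 GPU days and assumes all 120 GPUs ran continuously, which the statement does not confirm. The variable names are illustrative.

```python
# Reported figures from the statement.
gpu_count = 120
total_gpu_days = 2688

# Assumption: every GPU was busy for the whole run.
wall_clock_days = total_gpu_days / gpu_count
wall_clock_weeks = wall_clock_days / 7
total_gpu_hours = total_gpu_days * 24

print(f"Wall-clock time: {wall_clock_days:.1f} days ({wall_clock_weeks:.1f} weeks)")
print(f"Total compute:   {total_gpu_hours} GPU hours")
```

Under that assumption the run takes a little over three weeks of pure compute, which fits inside the roughly four-week window Foxconn describes.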

NVIDIA also supported Foxconn with its Taipei-1 supercomputer to complete the model's pre-training. Foxconn said FoxBrain would be an “important engine” for upgrading its three major platforms: smart manufacturing, smart EV, and smart city.
